Sinking warships with “kamikaze”-like strikes, attacking critical infrastructure, and “swarming” together to overwhelm enemy defenses, Ukraine’s Magura drone boats have had success countering Russian naval forces in the Black Sea, despite the Ukrainian Navy’s markedly limited resources.

These autonomous maritime vessels are having a moment, and the Pentagon and weapons industry alike want in on it. Flush with cash from venture capitalists and, increasingly, the DoD, which has awarded hundreds of millions in contracts to this end, defense-tech start-ups including Saronic, BlackSea, and Blue Water Autonomy have been building a new generation of autonomous and semi-autonomous maritime vessels.

Such vessels range from rowboat-sized drones, to Large Unmanned Surface Vehicles (LUSVs) hundreds of feet long, to submarines. Spanning tactical surface craft and strategic underwater systems, they offer a range of capabilities, including tracking and patrolling and carrying cargo and payloads. Increasingly, they also have lethal capabilities for use in kinetic contexts, such as firing lethal payloads, carrying out anti-missile defense, or even throwing themselves at targets, tasks that a human in the loop could carry out remotely.

Proponents tout this new generation of autonomous and semi-autonomous vessels as a way to engage militarily without risking human lives, and meet the Navy’s current fleet needs — all at a lower cost than manned ships. But between ongoing technical challenges building the vessels, AI’s tenuous track record as a military tool, and concerns that such systems’ presence in hostile waters could more easily spur conflict escalation, ongoing efforts to procure autonomous maritime vessels must proceed carefully to ensure their proliferation does not cause more harm than good.

Autonomous fleets: low-cost, low-risk?

Proponents depict myriad AI-powered vessels as a way to engage militarily without risking human lives, and at a lower cost than manned ships, while meeting other naval needs.

To this end, RAND analysts Kanna Rajan and Karlyn Stanley write that uncrewed vessels could take on potentially dangerous missions during conflict, such as delivering supplies, keeping armed service members out of harm’s way.

Dan Grazier, Senior Fellow and Director of the National Security Reform Program at the Stimson Center, told RS that autonomous vessels could meet specific needs in case of kinetic engagement. For example, they could offer a way to break through enemy attempts at area denial: an enemy's weapons, such as long-range missiles, which would normally deter U.S. ships, would not pose the same threat to uncrewed ships that are seen as disposable.

Grazier also told RS that uncrewed systems could cheaply alleviate the Navy’s ongoing fleet deficit, caused by its poor procurement track record.

“The Navy fleet is actually shrinking… mostly because of failed major shipbuilding programs, like the Littoral Combat Ship (LCS) and the Zumwalt-class programs,” Grazier said. “If we have American companies that are building effective uncrewed systems that could supplant the big, major manned ships, then… that might go quite a long way towards solving the fleet size problem.”

Unintended consequences loom large

But other practical issues plague these autonomous vessels’ roll-out — as do a host of ethical and security-related ones.

First, feasibility challenges persist: technical issues, in tandem with human error, led to small autonomous American vessels crashing during recent tests. And although smaller autonomous vessels have already seen combat in the Black Sea, Medium and Large Unmanned Surface Vehicles (MUSVs and LUSVs), which have been in development for years, may prove a harder challenge altogether for America’s defense industrial base.

“They've been working on the USV and the LUSVs for like 10 years now,” Michael Klare, professor emeritus of peace and world security studies at Hampshire College and a Senior Visiting Fellow at the Arms Control Association, told RS. “They've had trials. They've had them do exercises, elaborate exercises, and they… are still having difficulty getting the AI systems to work, so that these ships can operate autonomously,” he said. “The technology [for LUSVs] has not yet been developed far enough for them to be deployed with real forces in a real combat situation.”

Experts told RS that, if deployed in hostile waters, these vessels could escalate tensions amid already precarious geopolitical conditions. This might be especially true in the South China Sea, recently the site of several maritime confrontations.

“Defense officials in China have to worry about worst-case scenarios…and that makes everything a lot more [volatile] in a conflict,” Klare told RS. “They don't know what [adversaries’] unmanned vessels are doing, and [they may not] necessarily know where they are. They have to assume the worst,” he said. “In a crisis it's harder to keep control over the pace of escalation…you could have unintended escalations occurring.”

Stop Killer Robots spokesperson Peter Asaro questioned autonomous vessels’ ability to correctly discern civilian from military ships.

This misidentification risk is already at play: back in February, the Navy deployed autonomous Saildrone Voyagers, a type of unmanned surface vessel (USV), for counter-narcotics surveillance in the Caribbean, where the Trump administration has subsequently ramped up a campaign against so-called “narco-terrorists.” Here, if a USV were to mistake civilians for narcotics smugglers, that error could lead U.S. forces to attack them, inadvertently escalating already sky-high tensions in the region.

And these vessels’ proliferation could have other consequences. For example, Klare and Asaro told RS that the proliferation of autonomous submarines within military contexts could destabilize countries’ existing nuclear arms control regimes.

"If the [adversaries’] submarines become detectable and can be tracked in real time [by autonomous submarines], then you erase a country's invulnerable second-strike capabilities” based underwater, Klare said. “In a crisis, a country that feels threatened might decide to use its nuclear weapons first — before it comes under attack.”

Zooming out, experts spoke to concerns about the proliferation of AI in weapons systems within targeting contexts, where autonomous systems, rather than humans, might be entrusted to make life-or-death decisions.

To date, most autonomous vessels’ lethal capacities have been designed with a human in the loop, meaning a person remotely makes any decision to kill. But this could change: military officials and defense tech executives have repeatedly pushed to remove that human, saying slow human decision-making could inhibit AI-enabled machines’ warfighting capacities.

Ultimately, observers warn that surrendering this final decision-making authority to machines, in autonomous maritime vessels and other autonomous weapons systems, would cross a fundamental ethical threshold.

"I don't have a problem with remote-controlled weapons,” Dan Grazier told RS. “I do worry about autonomy [in this context]. I don't like the idea of a machine making a life-or-death decision…as a society, as a species, [we] need to figure this out — sooner, rather than later."

If autonomous maritime vessels are to help, rather than harm, they must be procured with these practical and ethical concerns at the forefront, lest intentions of saving armed service members’ lives at sea create a more precarious future of war for those on land.

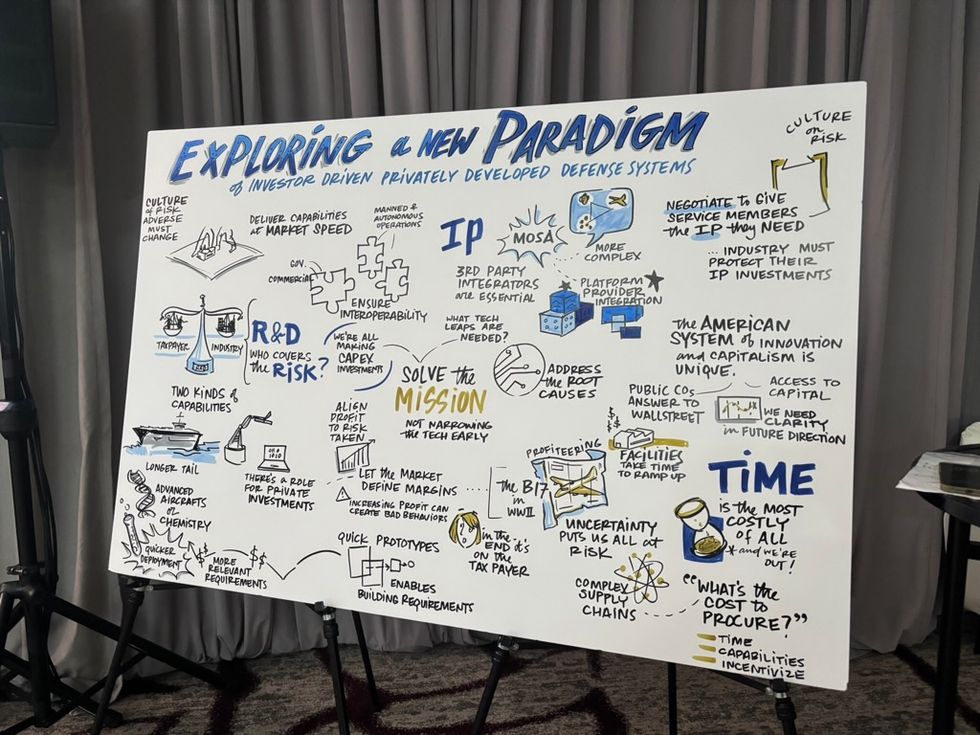

(Shana Marshall)
