At a swanky Tuesday night event in Washington, DC, defense tech start-up ShieldAI announced what it describes as the world’s “first autonomous fighter jet.”

The stealth plane, known as the X-BAT, “has the ability to organically close its own kill chains without reliance on third party assets,” bragged ShieldAI executive Armor Harris, adding that it can carry “the full arsenal of air-to-air, surface strike, [and] air-to-ground weapons.”

The goal, in Harris’s view, is to create a weapon so effective that it scares American adversaries into submission. “We believe that the greatest victory requires no war because our adversaries realize that we have a decisive advantage,” he told a crowd of politicians and defense industry types.

The X-BAT has yet to undergo field testing, so many of its capabilities remain hypothetical. But if they’re half as good as ShieldAI claims, then the aircraft represents a major step forward in military technology — and presents serious questions about the use of AI in decisions about who lives and dies on the battlefield.

The plane, which ShieldAI hopes will enter production in 2029, has a few unique features. One is its ability to vertically launch and land on easily portable vehicles and ships. “If you’re stationary in this day and age, you’re a target,” said ShieldAI president Brandon Tseng. “Air power without runways is truly the holy grail of deterrence.”

Of course, vertical takeoff is far easier said than done. Planes like the F-35 and V-22 Osprey boast vertical launch abilities but have faced countless maintenance problems due to their highly complex designs. ShieldAI’s other vertical takeoff vehicle, known as the V-BAT, had to be grounded last year after it malfunctioned during landing and injured a U.S. service member in a grisly incident. But ShieldAI believes it has overcome both of these problems by designing the aircraft to take off like a rocket and land without any direct physical assistance from soldiers.

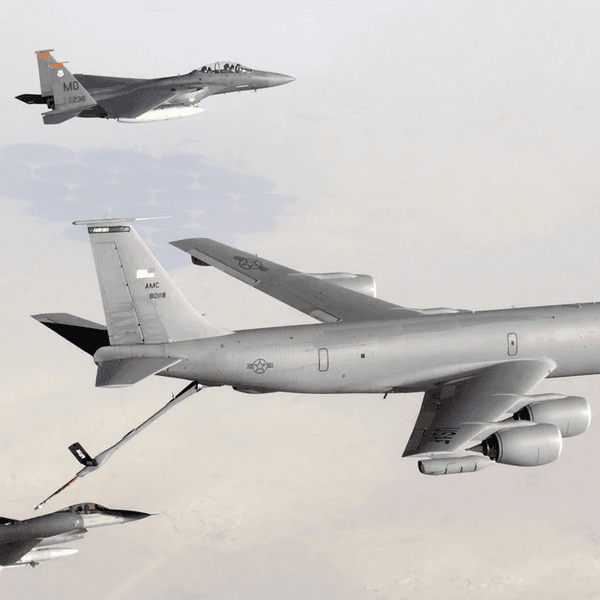

ShieldAI says the aircraft will have a range of more than 2,000 nautical miles. The startup, which is valued at $5.3 billion, did not reveal the X-BAT’s top speed, but Harris said it would be one of the “fastest things in the sky.” The company didn’t say which federal contract it hoped to earn with the X-BAT, though executives compared it to the Collaborative Combat Aircraft (CCA), which is set to be developed alongside the F-47 fighter jet.

The biggest selling point of the X-BAT is its autonomy. The U.S. military has already used ShieldAI’s Hivemind AI system to autonomously pilot planes in tests, including one in which former Air Force Secretary Frank Kendall sat in the pilot’s seat. Now, ShieldAI is hoping to deploy that technology in its own fighter jet.

“X-BAT can operate collectively with other manned assets, but as the capability and the autonomy grow, it actually doesn’t need to,” Harris said. Standing in front of an image that appeared to portray a U.S.-China war over Taiwan, the executive pitched a hypothetical mission in which X-BAT would be “assigned a box of airspace” to defend. “If it detects anything that doesn’t pass the friend or foe check, it’ll automatically engage it according to what the rules of engagement are,” Harris said.

Asked by RS to clarify his position on autonomous weapons, Harris said that ShieldAI believes “a human should be on the loop, at least, for every offensive kill decision.” (In military parlance, “on the loop” means that a soldier would watch the system and override its lethal decisions if necessary.) Tseng, who served in combat roles in the Navy, added that, “having made the moral decision about uses of lethal force on the battlefield, I fundamentally believe that should only be made by humans.”

But it remains unclear what level of oversight this would represent in practice. As Harris noted in his speech, X-BAT can attack targets “even if the comms link is severed,” meaning that a human could not intervene in the moment. Further complicating things is the fact that, as Tseng told RS, some decisions about targeting will be “made before operations,” which suggests limited visibility into the final steps of an attack.

The biggest question hanging over all of this is what happens if the technology is used in an urban environment rather than the open ocean, where a U.S.-China conflict is most likely to play out. In Gaza, Israel has used AI to develop massive lists of targets for bombings. While that system has a human directly in the loop — and requires sending an actual pilot to carry out the strike — critics say it allowed the Israel Defense Forces to justify a scorched-earth bombing campaign despite limited evidence about the guilt of its targets.

One source told +972 Magazine that Israeli officers would simply “rubber stamp” targets after roughly 20 seconds of review. So what happens if this cursory review is replaced with passive oversight? If ShieldAI gets its way, then we may well know the answer soon.
